Introduction

“The most important general-purpose technology of our era is Artificial Intelligence, particularly Machine Learning” – Harvard Business Review, July 2017.

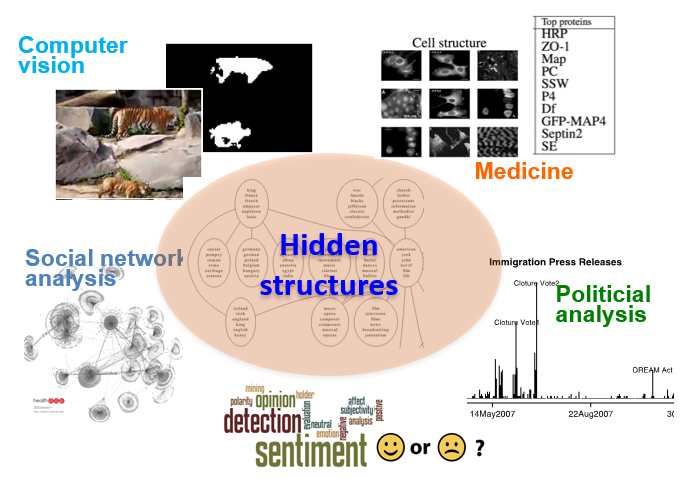

Our research group focuses on fundamental problems in Machine Learning in general and Deep Learning in particular. We also study how to apply modern Machine Learning techniques to other application areas. See the slides here for more detail.

Contact: Assoc. Prof. Than Quang Khoat, Email: khoattq@soict.hust.edu.vn

Research Directions

- Continual learning: Explore new models and methods that help a machine learn continually from task to task, or from data that may arrive sequentially and indefinitely.

- Deep generative models: Explore novel models that can generate realistic data (images, videos, music, art, materials, etc.). Recent models include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Diffusion Probabilistic Models (DPMs).

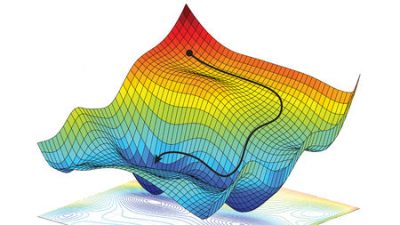

- Theoretical foundations of Deep Learning: Explore why deep neural networks often generalize well and why overparameterized models can generalize so well in practice. Identify the conditions under which machine learning models achieve strong generalization.

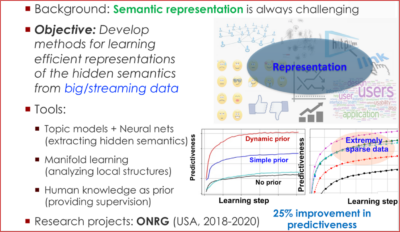

- Representation learning: Explore novel ways to learn latent representations of data that can boost the performance of various machine learning models across different applications.

- Recommender systems: Explore the efficiency of modern machine learning models in recommender systems.

Some Research Problems

- Why does catastrophic forgetting occur, and how can it be avoided when learning continually from task to task? What is an efficient way to balance different sources of knowledge?

- Why are noise and sparsity so challenging in data streams, where data may arrive sequentially and indefinitely? How can these challenges be overcome?

- Explore novel models that can generate realistic data (images, videos, music, art, materials, etc.).

- Why can generative models generalize well even though most are unsupervised in nature?

- Can adversarial models really generalize when different players use different losses?

- Why do deep neural networks often generalize well?

- Why are deep neural networks often vulnerable to adversarial attacks or noise? What are the root causes, and how can they be overcome?

- Why can overparameterized models generalize really well in practice?

- What are the necessary conditions for high generalization of machine learning models?

- What criteria ensure that a learned latent representation of data is good? What are good criteria for learning a new latent space?

- Does self-supervised learning really generalize?

- Why is extreme sparsity such a challenge in recommender systems? How can sparsity be handled efficiently?

- Can modeling high-order interactions between users and items help improve the effectiveness of recommender systems?

- Can modeling sequential behaviors of online users help improve the effectiveness of recommender systems?

Latest Publications

Publications in 2025

- Lichuan Xiang, Quan Nguyen-Tri, Lan-Cuong Nguyen, Hoang Pham, Khoat Than, Long Tran-Thanh, Hongkai Wen. DPaI: Differentiable Pruning at Initialization with Node-Path Balance Principle. International Conference on Learning Representations. Singapore. 24/04/2025

- Quyen Tran, Tung Lam Tran, Khanh Doan, Toan Tran, Dinh Phung, Khoat Than, Trung Le. Boosting Multiple Views for pretrained-based Continual Learning. International Conference on Learning Representations. Singapore. 24/04/2025

Publications in 2024

- Thanh-Thien Le, Viet Dao, Linh Nguyen, Thi-Nhung Nguyen, Linh Ngo Van, Thien Nguyen. SharpSeq: Empowering Continual Event Detection through Sharpness-Aware Sequential-task Learning. Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 3632–3644. 16/06/2024

- Sikandar Ali Qalati, Domitilla Magni, and Faiza Siddiqui. Senior Management's Sustainability Commitment and Environmental Performance: Revealing the Role of Green Human Resource Management Practices. Business Strategy and the Environment. 02/08/2024

- Thanh-Thien Le, Manh Nguyen, Tung Thanh Nguyen, Linh Ngo Van, Thien Huu Nguyen. Continual Relation Extraction via Sequential Multi-Task Learning. Proceedings of the AAAI Conference on Artificial Intelligence. 18444-18452. 22/02/2024

- T. K. Lai and I. L. Ngo. A new design and optimization of VD-ECF micro-pump: Advancements in electrohydraulic performance. Physics of Fluids. 29/07/2024

- Tung Nguyen, Tung Pham, Linh Ngo Van, Ha-Bang Ban, Khoat Than. Out-of-vocabulary handling and topic quality control strategies in streaming topic models. Neurocomputing. 128757. 19/10/2024

- T. K. Lai and I. L. Ngo. An investigation on the thermo-electrohydraulic performance of novel ECF micro-pump. International Journal of Heat and Mass Transfer. 29/09/2024

- Cuong Quoc Dang, Dung Trung Nguyen, Dat Ha Mai, Duc Van Vu, Anh Ngoc Hoang, Uyen Thu Nguyen, Thiep Van Nguyen, Tan Xuan Phan, Tung Phong Doan, and Cuong Dinh Hoang. From Synthesis to Realism: Enhancing Logistics with Computer Vision and Domain-Adversarial Training. ICIIT '24: Proceedings of the 2024 9th International Conference on Intelligent Information Technology. 327-333. Hồ Chí Minh City, Viet Nam. 23/02/2024

- Sikandar Ali Qalati, MengMeng Jiang, Samuel Gyedu, and Emmanuel Kwaku Manu. Do Strong Innovation Capability and Environmental Turbulence Influence the Nexus Between Customer Relationship Management and Business Performance? Business Strategy and the Environment. 02/07/2024

- T. K. Lai, K. D. Tran, and I. L. Ngo. A numerical study on the thermo-electrohydrodynamic performance of ECF micro-pumps. Sustainability and Emerging Technologies for Smart Manufacturing. 29/04/2024

- T. K. Lai and I. L. Ngo. An investigation on the electrohydraulic performance of novel ECF micro-pump with NACA-shaped electrodes. Theoretical and Computational Fluid Dynamics. 29/02/2024

- Hoàng Thanh Hải, Thân Quang Khoát. A Deep Learning Approach for Credit Scoring. TNU Journal of Science and Technology. 14/05/2024

- Duy-Tung Pham, Thien Trang Nguyen Vu, Tung Nguyen, Linh Ngo Van, Duc Anh Nguyen, Thien Huu Nguyen. NeuroMax: Enhancing Neural Topic Modeling via Maximizing Mutual Information and Group Topic Regularization. The 2024 Conference on Empirical Methods in Natural Language Processing. 7758–7772. 11/11/2024

- Tung Tran, Khoat Than, Danilo Vargas. Robust Visual Reinforcement Learning by Prompt Tuning. ACCV 2024. Lecture Notes in Computer Science. 387–401. Hanoi. 08/12/2024

- Ha An Le, Trinh Van Chien, Van Duc Nguyen, Wan Choi. Channel Analysis and End-to-End Design for Double RIS-Aided Communication Systems with Spatial Correlation and Finite Scatterers. IEEE Conference on Global Communications (GLOBECOM). 1561-1566. 03/08/2023

- Tinh Luong, Thanh-Thien Le, Linh Ngo, Thien Nguyen. Realistic Evaluation of Toxicity in Large Language Models. Association for Computational Linguistics ACL 2024. 1038–1047. 11/08/2024

- JYE Tin, WW Tan, AA Bakar, MS Mahali, FF Lothai, NF Mohammad, SSA Hassan & KF Chin. A Conceptual Design of Sustainable Solar Photovoltaic (PV) Powered Corridor Lighting System with IoT Application. ICREEM 2022. 09/03/2024

- Quyen Tran, Nguyen Xuan Thanh, Nguyen Hoang Anh, Nam Le Hai, Trung Le, Linh Van Ngo, Thien Huu Nguyen. Preserving Generalization of Language models in Few-shot Continual Relation Extraction. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 13771–13784. 11/11/2024

- Hieu Man, Chien Van Nguyen, Nghia Trung Ngo, Linh Ngo, Franck Dernoncourt, Thien Huu Nguyen. Hierarchical Selection of Important Context for Generative Event Causality Identification with Optimal Transports. Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024). 8122–8132. Torino, Italy. 20/05/2024

- Tung Doan, Tuan Phan, Phu Nguyen, Khoat Than, Muriel Visani, Atsuhiro Takasu. Partial ordered Wasserstein distance for sequential data. Neurocomputing. 127908-127925. 19/05/2024

- Viet Nguyen, Giang Vu, Tung Nguyen Thanh, Toan Tran, Khoat Than. On inference stability for diffusion models. AAAI Conference on Artificial Intelligence. Canada. 22/02/2024

- Viet Dao, Van-Cuong Pham, Quyen Tran, Thanh-Thien Le, Linh Ngo Van, Thien Huu Nguyen. Lifelong Event Detection via Optimal Transport. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 12610–12621. 12/11/2024

Publications in 2023

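- Nguyễn Đức Ca, Phan Thị Thu, Hoàng Thị Minh Anh, Phạm Ngọc Dương, Nguyễn Hoàng Giang, Nguyễn Lệ Hằng. Nâng cao hiệu quả quản trị đại học trong bối cảnh đổi mới giáo dục tại Việt Nam (Improving the Effectiveness of University Governance in the Context of Educational Reform in Vietnam). Tạp chí khoa học giáo dục Việt Nam (Vietnam Journal of Educational Sciences). 14/03/2023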

- Sikandar Ali Qalati, Sonia Kumari, Kayhan Tajeddini, Namarta Kumari Bajaj, and Rajib Ali. Innocent devils: The varying impacts of trade, renewable energy and financial development on environmental damage: Nonlinearly exploring the disparity between developed and developing nations. Journal of Cleaner Production. 02/02/2023

- Huy Huu Nguyen, Chien Van Nguyen, Linh Ngo Van, Luu Anh Tuan, Thien Huu Nguyen. A Spectral Viewpoint on Continual Relation Extraction. Conference on Empirical Methods in Natural Language Processing. 9621-9629. 06/12/2023

- Wu Qinqin, Sikandar Ali Qalati, Rana Yassir Hussain, Hira Irshad, Kayhan Tajeddini, Faiza Siddique, Thilini Chathurika Gamage. The effects of enterprises' attention to digital economy on innovation and cost control: Evidence from A-stock market of China. Journal of Innovation & Knowledge. 02/12/2023

- Ha An Le, Trinh Van Chien, Van Duc Nguyen, Wan Choi. Double RIS-Assisted MIMO Systems Over Spatially Correlated Rician Fading Channels and Finite Scatterers. IEEE Transactions on Communications. 4941-4956. 13/05/2023

- Chien Van Nguyen, Linh Van Ngo, and Thien Huu Nguyen. Retrieving Relevant Context to Align Representations for Cross-lingual Event Detection. Association for Computational Linguistics: ACL 2023. 2157–2170. Canada. 09/07/2023

- Sikandar Ali Qalati, Belem Barbosa, and Blend Ibrahim. Factors influencing employees’ eco-friendly innovation capabilities and behavior: the role of green culture and employees’ motivations. Environment, Development and Sustainability. 02/10/2023

- Thi Hong Vuong, Tung Doan and Atsuhiro Takasu. Deep Wavelet Convolutional Neural Networks for Multimodal Human Activity Recognition Using Wearable Inertial Sensors. Sensors. 9721-9743. 05/12/2023

- Nam Le Hai, Trang Thien, Linh Ngo Van, Thien Nguyen, Khoat Than. Continual variational dropout: a view of auxiliary local variables in continual learning. Machine Learning. 281-323. 04/11/2023

- Tran Xuan Bach, Nguyen Duc Anh, Ngo Van Linh, Khoat Than. Dynamic Transformation of Prior Knowledge Into Bayesian Models for Data Streams. IEEE Transactions on Knowledge and Data Engineering. 3742-3750. 23/12/2021

- H.T. Vuong, A. Takasu, T.P. Doan. Deep Sensor-fusion Approach to Vehicle Detection on Bridges using Multiple Strain Sensors. The International Symposium on Life-Cycle Civil Engineering (IALCCE). 1935-1942. Milan, Italy. 02/07/2023

- Duy-Tung Nguyen, Duc-Manh Nguyen, Dinh-Tan Pham, Khoat Than, Hong-Thai Pham, Hai Vu. Bayesian method for bee counting with noise-labeled data. 12th International Symposium on Information and Communication Technology. 401–408. Ho Chi Minh City. 07/12/2023

- Sikandar Ali Qalati, Belem Barbosa, and Shuja Iqbal. The effect of firms’ environmentally sustainable practices on economic performance. Economic Research-Ekonomska Istraživanja. 02/06/2023

- Lam Tran, Viet Nguyen, Phi Nguyen, Khoat Than. Sharpness and Gradient Aware Minimization for Memory-based Continual Learning. International Symposium on Information and Communication Technology (SOICT). 189–196. 07/12/2023

- Khang Nguyen, Kien Do, Truong Vu, Khoat Than. Unsupervised image segmentation with robust virtual class contrast. Pattern Recognition Letters. 10-16. 07/07/2023